Memory barriers are used to enforce an ordering on how the compiler or CPU executes memory operations. Since most CPUs use some form of optimization, such as out-of-order execution, to speed up the execution of code, memory operations may complete in a different order from the one written in the program, leading to unpredictable results. Each variable or data structure we create is referred to as an object, and each object has a memory address and an order in which it is loaded and stored. This order defines which variables are written to and loaded first.

You will probably never notice this in a program which has one thread, typically simply main. However, if you create two threads and have them run concurrently, the question of which thread runs first arises. Although we can add synchronization dispatcher objects to our code, the point of this blog post is to look at the concept of memory barriers and to examine, through binary analysis, the Assembly code which is produced.

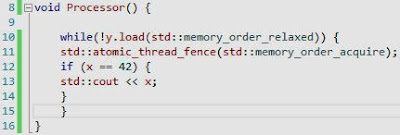

In the Main thread, I've created a global atomic boolean value called y, and have set it to false. Two additional threads are then created, which run functions called Processor and Processor2.

The code for the Processor function is above. While y has not yet been read as true, we wait in a loop; once it has, the acquire fence forms a synchronizes-with relationship with the release fence in the other thread. Fences must be used in pairs of two different types, a release fence on the storing side and an acquire fence on the loading side; otherwise no relationship is formed and we will not achieve the purpose of our synchronization.

The code for the Processor2 function shows that x will be stored, or written, with the value of 42. Again, x is a global variable, and is thus available to all parts of the program. We then insert a fence instruction between the store to x and the store to y; this ensures that the store to x is visible to the other thread once that thread has read the new value of y and passed its own fence.

If the load before the acquire fence observes the store made after the release fence, then the acquire fence forms a synchronizes-with relationship with that release fence.

It's easier to understand with the insertion of an assert into our code in the Main thread. The assert is controlled by the value of a global atomic variable called z. If this variable has been incremented by 1, then the assert will not fire.

Once the two threads have synchronized, the value of x is evaluated and the value of z is incremented to 1, ensuring that the assert does not fire and terminate the program.
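The fence pairing described above can be sketched roughly as follows. This is a reconstruction under assumptions, not the exact code from the post: the names x, y, z, Processor and Processor2 come from the text, while the type of x, the relaxed memory orders, the yield inside the spin loop and the `run_fence_demo` driver (a hypothetical stand-in for the Main thread) are my own choices.

```cpp
#include <atomic>
#include <cassert>
#include <thread>

// Globals named after the post; the exact types are assumptions.
std::atomic<int>  x{0};
std::atomic<bool> y{false};
std::atomic<int>  z{0};

// Writer: store x, issue a release fence, then store y.
void Processor2() {
    x.store(42, std::memory_order_relaxed);
    std::atomic_thread_fence(std::memory_order_release);
    y.store(true, std::memory_order_relaxed);
}

// Reader: spin until y is seen as true, issue an acquire fence, then read x.
void Processor() {
    while (!y.load(std::memory_order_relaxed)) {
        std::this_thread::yield();  // be polite while waiting
    }
    std::atomic_thread_fence(std::memory_order_acquire);
    if (x.load(std::memory_order_relaxed) == 42) {
        ++z;
    }
}

// Hypothetical driver standing in for the Main thread.
int run_fence_demo() {
    x = 0;
    y = false;
    z = 0;
    std::thread reader(Processor);
    std::thread writer(Processor2);
    reader.join();
    writer.join();
    assert(z.load() != 0);  // must hold: the fences order x before y
    return z.load();
}
```

Because the reader only passes its acquire fence after seeing the store to y, and that store was sequenced after the release fence, the read of x is guaranteed to see 42. Removing either fence removes that guarantee.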

You may have heard or seen people talk about the use of the volatile keyword. This keyword tells the compiler that the value may be modified by something outside the program's control, such as the hardware, so accesses to it must not be optimized away. This brings in the term modification ordering. Whether volatile also affects the ordering of the surrounding instructions depends on the compiler; in standard C++ it is not a substitute for atomics or fences. See the references section for more information.

Memory Barriers Internals

I assume you know generally how the cache works, and what cache hits and misses are. In this case, I'm going to discuss some of the features of the cache and then explain how memory barriers relate to the operation of the CPU Cache.

The above is the general structure of the cache, and its relationship to memory. Initially, each access will be a cache miss, since no data is present within the cache yet. However, once the data has been fetched from memory and placed into the cache, a cache hit will occur for each subsequent read of that same object.

However, the cache is a limited resource and therefore cannot hold the address of every object being read from memory. When the cache becomes full and the processor attempts to place new data into the cache, cache capacity misses will result. These cache misses, as the name suggests, are a result of a lack of space within the cache.

The cache is organized as a hash table with fixed-size buckets called sets, and no chaining. A hash table is a data structure used to implement an associative array: a hash function maps each key to the bucket which holds the matching value. Essentially, the key is used to index into a bucket stored in the hash table. In a general hash table the key might be the name of our object; for the CPU cache, it is the object's memory address.

Looking at the above example of a cache, we can see there are currently 16 different buckets or sets. The hash function is based upon an algorithm which takes the key and the size of the array. Furthermore, the hash tables used for the cache do not support chaining, and therefore hash collisions are always possible. Separate chaining is the concept that an index can have multiple entries in a linked list, which allows colliding keys to be stored together rather than one replacing another.
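To make the hash table analogy concrete, here is a toy model of such a cache. The 16 sets come from the example above; the two entries per set, the 64-byte line size, the round-robin replacement and the `ToyCache` class itself are assumptions made purely for illustration, and real hardware differs in many details.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>

// 16 sets as in the post; ways per set and line size are assumptions.
constexpr std::size_t   kSets     = 16;
constexpr std::size_t   kWays     = 2;
constexpr std::uint64_t kLineSize = 64;

struct Line {
    bool          valid = false;
    std::uint64_t tag   = 0;
};

// Hypothetical model: a hash table with fixed-size buckets and no chaining.
class ToyCache {
public:
    // Returns true on a hit. On a miss the line is loaded, and if the set
    // is already full an existing line is evicted to make room.
    bool access(std::uint64_t address) {
        const std::uint64_t line = address / kLineSize;
        const std::size_t   set  = static_cast<std::size_t>(line % kSets);  // the "hash function"
        const std::uint64_t tag  = line / kSets;

        for (const Line& way : sets_[set]) {
            if (way.valid && way.tag == tag) {
                return true;  // cache hit
            }
        }

        // Cache miss: no chaining, so overwrite the next victim (round-robin).
        Line& victim = sets_[set][next_[set]];
        victim.valid = true;
        victim.tag   = tag;
        next_[set]   = (next_[set] + 1) % kWays;
        return false;
    }

private:
    std::array<std::array<Line, kWays>, kSets> sets_{};
    std::array<std::size_t, kSets>             next_{};
};
```

In this model the first access to an address misses and the next one hits. Three addresses that map to the same set cannot all stay resident, so the third one pushes the oldest out even if the rest of the cache is empty.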

When the cache has become full, the data within one of the sets will need to be removed, and the new data will need to be added. On the other hand, when a processor writes to data that is held in another processor's cache, the operation will result in unnecessary stalling for hundreds of instructions.

CPU 0 sends an invalidate message to CPU 1. The invalidate message contains the physical address of the cache line, and causes CPU 1 to remove the data from its cache so that CPU 0 is able to write to that line. As you can see, this is an expensive operation and there is a better method. The answer lies with store buffers, whereby a processor can record its write instructions in its buffer and continue executing until it's appropriate to complete the write to the cache. However, this leads to the problems of memory ordering and out-of-order execution.

Due to the problem of memory ordering and store buffers, memory barriers were introduced to enable programmers to perform synchronization at a level which is close to the hardware of the system.
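The effect of a store buffer, and of a barrier draining it, can be imitated with a very small simulation. Everything here is hypothetical: `ToyCpu`, `Memory` and the `barrier` method are names invented for this sketch, and a real barrier does considerably more than this model suggests (a real CPU also drains its buffer on its own over time).

```cpp
#include <cstdint>
#include <deque>
#include <unordered_map>
#include <utility>

// Shared "memory" visible to every CPU in the toy model.
using Memory = std::unordered_map<std::uint64_t, int>;

// Hypothetical single-CPU model: stores are queued rather than written through.
class ToyCpu {
public:
    explicit ToyCpu(Memory& memory) : memory_(memory) {}

    // A store goes into the store buffer so the CPU can keep executing.
    void store(std::uint64_t address, int value) {
        buffer_.emplace_back(address, value);
    }

    // A load sees this CPU's own pending stores first, newest first,
    // and falls back to shared memory (unwritten addresses read as 0).
    int load(std::uint64_t address) const {
        for (auto it = buffer_.rbegin(); it != buffer_.rend(); ++it) {
            if (it->first == address) {
                return it->second;
            }
        }
        const auto found = memory_.find(address);
        return found == memory_.end() ? 0 : found->second;
    }

    // In this model a barrier drains the buffer to memory in program order.
    void barrier() {
        while (!buffer_.empty()) {
            memory_[buffer_.front().first] = buffer_.front().second;
            buffer_.pop_front();
        }
    }

private:
    Memory& memory_;
    std::deque<std::pair<std::uint64_t, int>> buffer_;
};
```

With two `ToyCpu` objects sharing one `Memory`, a value stored by the first is visible to the first immediately but stays invisible to the second until the first calls `barrier()`. That gap is the ordering problem which memory barriers exist to close.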

Memory Barriers Disassembly

The main thread of the program begins at mainCRTStartup, which jumps to a block of code containing the necessary thread creation functions. By jumping to the location pointed to by the jump instruction, we can see how our thread is started. Please note that all default compiler and linker options are enabled, so you may notice things like security cookies.

We push the value of the ebp register onto the stack, and then copy the esp register, which is our current stack pointer, into ebp so that ebp becomes the frame pointer for this function. We then call some functions, and pop the saved ebp value back off the stack. The function then returns.

I was able to find most of the fence instructions and thread functions. We can jump to where these instructions begin, and examine the Assembly code used to produce them.

Let's look at the jump instruction for the atomic load (std::atomic_load_explicit), and have a quick look at a few of the details.

We can see that the function takes the atomic variable and the memory ordering constant, which are later pushed onto the stack.
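At the source level, those two arguments look like this. The flag `ready` and the helper names are hypothetical and only serve to show the call; the post's own call site is in the disassembly and may use a different memory order.

```cpp
#include <atomic>

std::atomic<bool> ready{false};  // hypothetical flag, not from the post

// Free-function form: a pointer to the atomic object plus a
// std::memory_order constant, matching the two arguments seen above.
bool read_flag() {
    return std::atomic_load_explicit(&ready, std::memory_order_acquire);
}

// Equivalent member-function form of the same load.
bool read_flag_member() {
    return ready.load(std::memory_order_acquire);
}
```

Both forms perform the same atomic load; the free function exists mainly for compatibility with C-style code.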

References

volatile (C++)

std::memory_order

Hash Table

Memory Barriers : a Hardware View for Software Hackers

Multiprocessor Considerations for Kernel-Mode Drivers
