- VTables and Virtual Functions

- Thread Storage Slots

- I/O Completion Ports

- IRP Queues

- PE Header Sections

- Registry Internals

- URBs and USB Internals

Thursday 30 January 2014

February: Blog Post List

These are, hopefully, the upcoming blog posts for February:

Wednesday 29 January 2014

Types of Page Faults

This blog post will expand upon the idea of Page Faults, which arise when Virtual to Physical Address Translation cannot complete, and take a look at the different kinds of Page Faults which can happen.

Collided Page Faults

Collided Page Faults are common on modern systems which have multiple threads. A Collided Page Fault occurs when a thread, either within the same process or from a different process, causes a Page Fault on a page which is already being paged in on behalf of another thread.

Before reading the rest of this section, note that the concept of Transition here applies to the PFN Database and not to PTEs and Prototype PTEs. Transition, in terms of the PFN Database, means that the page isn't in a working set list or present on any paging list; the page is therefore not owned by anyone, and it is in Transition while an I/O operation is being performed on it.

I haven't been able to find the correct symbols for the Transition Page List Head, and therefore won't be able to apply the _MMPFNLIST data structure to it. The symbol is most likely only accessible with Private symbols.

The ListName member is an enumeration which contains a list of all the PFN list types.

The Pager knows whether the page is being read by checking if the ReadInProgress bit is set in the _MMPFNENTRY data structure.

From here, the Pager may have the other threads wait on an Event dispatcher object (the thread which first caused the fault owns the Event object), or alternatively it may issue a parallel I/O operation to prevent deadlocks from occurring in the file system. In the latter case two threads compete against each other, and the thread which completes its I/O operation first resolves the Page Fault.

Once the I/O has completed, the ReadInProgress bit will be cleared and the PTE will be updated to point to the physical page. Any other threads which then obtain the PFN Database lock, as seen in the _MMPFNLIST data structure, will check whether the previous I/O operation completed successfully by checking the InPageError bit.
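As a rough user-mode analogy of the decision described above (this is not the Memory Manager's actual code), the sketch below models a PFN entry as a small state machine. The type and function names (SimPfnEntry, on_fault, complete_in_page) are hypothetical; only the ReadInProgress and InPageError bits come from the text.

```cpp
// Hypothetical, simplified stand-in for the bits discussed above.
struct SimPfnEntry {
    bool read_in_progress = false;  // modelled on the ReadInProgress bit
    bool in_page_error = false;     // modelled on the InPageError bit
    bool resident = false;          // true once the PTE points at the page
};

enum class FaultAction {
    AlreadyResident,  // nothing left to do
    WaitForInPage,    // collided fault: another thread owns the read
    StartInPage       // this thread starts the read
};

// Decide what a faulting thread should do for this page.
FaultAction on_fault(SimPfnEntry& entry) {
    if (entry.resident) {
        return FaultAction::AlreadyResident;
    }
    if (entry.read_in_progress) {
        return FaultAction::WaitForInPage;
    }
    entry.read_in_progress = true;
    entry.in_page_error = false;
    return FaultAction::StartInPage;
}

// Called by the thread that owns the read once the I/O has finished.
void complete_in_page(SimPfnEntry& entry, bool success) {
    entry.read_in_progress = false;
    entry.in_page_error = !success;  // waiters inspect this after waking
    entry.resident = success;
}
```

A second call to on_fault while the first read is outstanding returns WaitForInPage, which is the collided case; a waiter would then look at in_page_error before trusting the page.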

Clustered Page Faults

Clustered Page Faults are designed for performance, rather than to resolve problems like deadlocks with the file system mechanisms being used. Clustered Page Faults rely upon placing large numbers of pages into the page cache rather than the system working set, in order to reduce the consumption of virtual address space and to avoid being limited by the size of the virtual address space on the given system.

Furthermore, any pages which will be reused do not have to be invalidated within the TLB with IPIs, or by flushing and rewriting the CR3 register. Any PTEs which had a mapping will have the Transition bit set, and their pages will be placed onto a Standby list.

If the page is then referenced by a process, it is added to the working set of that process; however, if the page is never referenced, it will simply remain within the Page Cache. Any pages which are already present in the Page Cache and physical memory will be represented with a dummy page. With Page Clustering, if two pages are referenced within the same cluster, then the Memory Manager will issue a single I/O operation for the two pages, instead of two read operations, to increase performance.

The other pages which are already present in physical memory will point to a systemwide dummy page.

The Size field indicates the size of the MDL data structure and the size of the array of PFN entries for the resident pages.
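To make the dummy page idea more concrete, here is a minimal sketch of how the frame array for one clustered read could be filled in. It is only an illustration under my own assumptions: kDummyFrame and build_cluster_targets are hypothetical names, and the real MDL handling inside the Memory Manager is more involved.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical frame number standing in for the systemwide dummy page.
constexpr std::uint64_t kDummyFrame = 0xFFFFFFFFu;

// For one clustered read, choose the destination frame of every page.
// resident[i] is true when page i of the cluster is already in memory,
// so its data from the read is thrown away into the dummy page.
// free_frames supplies frames for the pages that really need the data.
std::vector<std::uint64_t> build_cluster_targets(
        const std::vector<bool>& resident,
        const std::vector<std::uint64_t>& free_frames) {
    std::vector<std::uint64_t> targets;
    std::size_t next_free = 0;
    for (bool is_resident : resident) {
        if (is_resident) {
            targets.push_back(kDummyFrame);
        } else if (next_free < free_frames.size()) {
            targets.push_back(free_frames[next_free++]);
        } else {
            break;  // no frames left: the cluster is cut short here
        }
    }
    return targets;
}
```

The returned array plays the role of the PFN array in the MDL: one entry per page of the cluster, covered by a single read.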

Monday 27 January 2014

Rootkits: Direct Kernel Object Manipulation and Processes

DKOM is one of the methods commonly implemented by rootkits in order to remain undetected, since this is the main purpose of a rootkit: to be able to access Kernel-Mode code and data structures without detection from security programs or the tools used by security analysts and researchers. Rootkits are probably less of a problem than they used to be, with most rootkit detection tools being able to find the known variations of a rootkit, unless of course new ones are produced. Rootkits are able to steal information and hide directories and files to remain undetected.

Usually, all objects are managed by the Object Manager; DKOM, however, bypasses the Object Manager completely, making it harder for rootkits to be detected. DKOM can also be used to modify the privilege level of a thread, hide processes and ports, and hide device drivers.

Rootkits will commonly check the operating system version to be able to adapt to the environment in which they are running. You can view the operating system version with the vertarget command in WinDbg.

As we should know, Processes are represented as an object with an _EPROCESS data structure. The diagram below shows us the general overview of how processes are managed by the operating system. I'll apply this diagram to WinDbg.

The most important part of the diagram is the linked list of Processes and the pointers within the doubly linked list to connect each process together.

The Processor Region Control Block contains the address of the current running thread, the next thread scheduled to run and the currently idle thread. We can use the dt command with _KPRCB data structure or use the !prcb extension to view this information.

Each thread is represented by an _ETHREAD data structure, which contains the _KTHREAD data structure for the same thread.

We can then view the _KTHREAD data structure using the same WinDbg command.

Looking at the _EPROCESS data structure, you will notice a field called ActiveProcessLinks, which is a _LIST_ENTRY structure and represents a doubly linked list.

Since ActiveProcessLinks is a doubly linked list, it must have a Head. This head can be found by checking the PsActiveProcessHead symbol. Instead of using the dt command, you can use the dl command to display doubly and singly linked lists in WinDbg.

The first entry should point to the starting process, which is always the System Process. We can check this is correct by displaying the PsInitialSystemProcess global variable, which points to the address of the _EPROCESS data structure for the System process.

Rootkits then take advantage of this linked list structure by modifying the pointers within the linked list through DKOM, changing the Flink and Blink pointers to wrap around the process which should be hidden.

The Blink and Flink pointers of the two neighbouring processes will need to point to each other in order to remain valid within the linked list, otherwise BSODs will result when PspExitProcess is called to terminate a process. You can determine if a linked list is valid with the !validatelist extension.
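The pointer relationship can be shown with a small user-mode toy list. This is a sketch under my own assumptions, not kernel code: ListEntry only mimics the Flink and Blink members of _LIST_ENTRY, and validate_list is a hypothetical helper which performs the same kind of consistency check that a list validation would rely on (every entry's Flink must point to an entry whose Blink points back).

```cpp
// Toy stand-in for _LIST_ENTRY; only the two link members are modelled.
struct ListEntry {
    ListEntry* Flink;
    ListEntry* Blink;
};

// An empty list is a head which points at itself in both directions.
void init_head(ListEntry& head) {
    head.Flink = &head;
    head.Blink = &head;
}

void insert_tail(ListEntry& head, ListEntry& entry) {
    entry.Flink = &head;
    entry.Blink = head.Blink;
    head.Blink->Flink = &entry;
    head.Blink = &entry;
}

// Make the two neighbours point at each other, skipping `entry`.
void unlink_entry(ListEntry& entry) {
    entry.Blink->Flink = entry.Flink;
    entry.Flink->Blink = entry.Blink;
    entry.Flink = &entry;  // leave the removed entry pointing at itself
    entry.Blink = &entry;
}

// Returns the number of entries, or -1 if a Flink/Blink pair disagrees
// or the walk exceeds `limit` entries without returning to the head.
int validate_list(const ListEntry& head, int limit = 1 << 20) {
    int count = 0;
    const ListEntry* cur = &head;
    do {
        if (cur->Flink == nullptr || cur->Flink->Blink != cur) {
            return -1;
        }
        cur = cur->Flink;
        if (++count > limit) {
            return -1;
        }
    } while (cur != &head);
    return count - 1;  // the head itself is not an entry
}
```

After unlink_entry the list still validates, just with one entry fewer, which is why walking ActiveProcessLinks alone will not reveal the hidden entry and the other detection methods are needed.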

These types of rootkits can be detected by checking the handle table for threads which do not appear to have any processes, or by checking the thread scheduler list. The Handle Table for a Process can be found by looking within the _EPROCESS data structure, and then examining the ObjectTable field, which contains a pointer to the _HANDLE_TABLE data structure.

The HandleTableList field within the _HANDLE_TABLE data structure contains the doubly linked list of all the connected Handle Tables for each process.

We can use the !list extension to traverse the elements stored within the doubly linked list, and display each element.

References:

Rootkits: Subverting the Windows Kernel

Ring of Fire: Rootkits and DKOM

When Malware Meets Rootkits - Symantec

Windows Memory Forensics and DKOM - Jesse Kornblum

Hidden Processes - The Implication for Intrusion Detection

Thursday 23 January 2014

List of WHEA Data Structures

I've listed other WHEA data structures in my other blog posts, and therefore will not be listing the same ones here. The purpose of this blog post is to list the WHEA data structures available with WinDbg, and Microsoft's Public Symbol Server. The information within the structures has more or less been explained in my other WHEA posts, but if in doubt please leave a comment or read the WDK documentation.

_WHEA_ERROR_STATUS

_WHEA_ERROR_RECORD_HEADER_FLAGS

_WHEA_ERROR_PACKET_V2

_WHEA_ERROR_PACKET_FLAGS

_WHEA_ERROR_TYPE

_WHEA_ERROR_SEVERITY

_WHEA_ERROR_SOURCE_TYPE

_WHEA_ERROR_PACKET_DATA_FORMAT

_WHEA_ERROR_RECORD

_WHEA_ERROR_RECORD_HEADER

_WHEA_ERROR_RECORD_SECTION_DESCRIPTOR

_WHEA_REVISION

_WHEA_ERROR_RECORD_SECTION_DESCRIPTOR_VALIDBITS

_WHEA_ERROR_RECORD_SECTION_DESCRIPTOR_FLAGS

Understanding PCI Configuration Space

In a dump file I was debugging for a user on Sysnative Forums, I noticed there were a few references to PCI Configuration Space within the call stack. The PCI Configuration Space can be accessed by device drivers and other programs which use software drivers to gather additional information. It was easy to find a possible cause from the call stack in the example; however, the topic of this discussion will be explaining the PCI Configuration Space.

The driver in question belongs to CPU-Z.

PCI Configuration Space

The PCI Configuration Space is a set of registers. On PCI Express (PCIe) buses, this configuration space may be referred to as the Extended Configuration Space. These registers are then mapped into an address space which the CPU can reach, such as the I/O Address Space of the CPU.

The Configuration Space is typically 256 bytes, and can be accessed with Read/Write Configuration Cycles. The target device for a Configuration Space access is selected with the Initialization Device Select (IDSEL) signal, which is then decoded by the target device. However, since most CPUs have no native way to perform such an access, there must be some kind of mechanism which allows access to the PCI Configuration Space. Two such mechanisms exist: Mechanism #1 and Mechanism #2. Mechanism #2 is only provided for backwards compatibility, and therefore will not be part of the discussion later on.

The Configuration Space can be accessed by drivers with a set of IRPs, or by accessing members within the BUS_INTERFACE_STANDARD data structure.

The IRP_MN_WRITE_CONFIG is used to write data to the configuration space specified. The IRP is a Minor Function Code for a PnP IRP. Since the buffer containing the information to be written is allocated from paged pool, this IRP must be sent at an IRQL lower than DISPATCH_LEVEL.

The IRP_MN_READ_CONFIG is used to read data from the configuration space. This IRP is also a Minor Function Code for a PnP IRP, and it must also be sent at an IRQL lower than DISPATCH_LEVEL for the same reason as above.

I mentioned earlier the two Mechanisms used to access the Configuration Space. With the newer mechanism (Mechanism #1), two 32-bit I/O registers are defined: one is called CONFIG_ADDRESS (0xCF8) and is used to provide the configuration address to be accessed, whereas the second is named CONFIG_DATA (0xCFC) and is used to transfer data to and from the register selected by CONFIG_ADDRESS.

The structure of the CONFIG_ADDRESS register can be found below:

Bit 31 is used to enable the translation of accesses to CONFIG_DATA into configuration cycles. These cycles are simply addressed transactions on the PCI/PCIe bus. These Configuration Cycles are divided into two types: Type 0 and Type 1, and will be discussed later on.

Bits 23-16 serve as a Bus Number, to enable the system to select a specific PCI bus. Bits 15-11 serve as a Device Number, to select a device connected to the PCI bus. Bits 10-8 are used for the selection of PCI functions (if more than one), which are in simple terms logical devices within a certain device. Bits 7-2 select the register as a 32-bit aligned offset, and the last two bits must be 0, since all reads and writes have to be 32 bits long.
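The bit layout above can be turned into a small helper. This is only a sketch: make_config_address is a hypothetical name of my own, and the code just builds the value, since actually writing it to port 0xCF8 requires Kernel-Mode port I/O which is not shown here.

```cpp
#include <cstdint>

// I/O ports used by Mechanism #1.
constexpr std::uint16_t kConfigAddressPort = 0xCF8;
constexpr std::uint16_t kConfigDataPort = 0xCFC;

// Build the 32-bit value to be written to CONFIG_ADDRESS.
constexpr std::uint32_t make_config_address(std::uint8_t bus,
                                            std::uint8_t device,
                                            std::uint8_t function,
                                            std::uint8_t offset) {
    return (1u << 31)                                           // Bit 31: enable
         | (static_cast<std::uint32_t>(bus) << 16)              // Bits 23-16
         | (static_cast<std::uint32_t>(device & 0x1F) << 11)    // Bits 15-11
         | (static_cast<std::uint32_t>(function & 0x07) << 8)   // Bits 10-8
         | (static_cast<std::uint32_t>(offset) & 0xFCu);        // Bits 7-2, low two bits 0
}
```

For example, bus 1, device 2, function 3 and register offset 0x10 give 0x80011310.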

We haven't discussed one of the more fundamental aspects of the Configuration Space, which is the Configuration Space Header. The diagram below shows the structure of the said header.

The Configuration Space Header provides information to enable the operating system to interact with and control the device. There are currently two different Header Types: Type 1 for bridges, switches and root complexes, and Type 0 for Endpoints. The above example is for Endpoints.

The Interrupt Pin and Interrupt Line fields are used with IRQs, the IDT and IRQL Levels. Each PCI device typically has four pins, which are A, B, C and D. Each pin is used to enable the hardware to interrupt the processor using the Interrupt Line, which maps to an ISR within the IDT, so that the appropriate ISR in the device driver code can handle the interrupt.

The Device ID and Vendor ID are used to identify the device connected to the PCI bus. We can use these values in a PCI Database to find the manufacturer of the device.

The Subsystem Vendor ID (SVID) and Subsystem ID (SSID) provide model specific device information.

The Command and Status registers are used to provide feature and error reporting information which I have discussed in my Stop 0x124 PCIe Debugging Posts.

A Built-In Self Test is started by setting Bit 6 of the BIST register to 1.

We can view the Configuration Space for a certain device with WinDbg, which I will show later in the WinDbg Extensions section of this post. I'll explain Base Address Registers, Class Codes and Bus Enumeration there too.

Call Stack Functions

I've managed to find some code on ReactOS, which may explain some of the internal PCI functions seen in the call stack of the dump file.

HalpPCIConfig seems to perform some form of synchronization, and then begins performing a read/write operation with the specified buffer.

The rest of the documentation can be found here.

WinDbg Extensions

I've managed to find two extensions which could provide additional information for our debugging efforts. These extensions are !pcitree and !pci. Please note that in order to use !pci, you will need a Live Debugging session, either with the local computer or with a remote one. I enabled a Live Debugging session on my computer to provide you with an example.

The !pcitree extension shows all the buses and the devices attached to these buses. Using the information provided with the !devext extension, the other fields provided by the !pcitree extension will become more apparent.

The d field is the Device Number, the f field is the Function Number. We can see the Interrupt Line number, the Vendor and Device information, Header Type and Class Information.

The !pci extension with the 0x100 flag shows the information stored within the PCI Configuration Space. Please note this is an abridged output for one particular PCI bus.

There are three key points I would like to discuss in this section: BARs, Classes and Bus Enumeration. I will start with Bus Enumeration, which we can obtain with the !pcitree extension.

There are three different methods of Bus Enumeration, which will be described shortly. Bus Enumeration is a method of checking whether any devices are connected to the PCI bus. This is required since the BIOS and the operating system do not have any method of their own to be able to check if a device is connected. Bus Enumeration is performed by reading the Vendor ID and Device ID Registers; if the Function being read does not exist, the read fails and returns all F's (Hexadecimal), which is all 1's in Binary.
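The simplest form of this check, probing every device and function on one bus, could be sketched as follows. This is an assumption-laden illustration rather than how any particular BIOS or operating system does it: the config space read is passed in as a callback (ConfigRead32, a hypothetical type) so the logic can be exercised without real hardware, and enumerate_bus and PciLocation are names of my own.

```cpp
#include <cstdint>
#include <functional>
#include <vector>

struct PciLocation {
    std::uint8_t bus;
    std::uint8_t device;
    std::uint8_t function;
};

// Hypothetical callback: returns the 32-bit register at `offset`.
using ConfigRead32 = std::function<std::uint32_t(
    std::uint8_t bus, std::uint8_t device,
    std::uint8_t function, std::uint8_t offset)>;

// Probe all 32 devices and up to 8 functions on one bus.
std::vector<PciLocation> enumerate_bus(std::uint8_t bus,
                                       const ConfigRead32& read32) {
    std::vector<PciLocation> found;
    for (std::uint8_t device = 0; device < 32; ++device) {
        for (std::uint8_t function = 0; function < 8; ++function) {
            // Offset 0x00: Vendor ID in the low 16 bits, Device ID above.
            const std::uint32_t id = read32(bus, device, function, 0x00);
            const auto vendor = static_cast<std::uint16_t>(id & 0xFFFFu);
            if (vendor == 0xFFFF) {
                if (function == 0) {
                    break;     // function 0 missing: no device here
                }
                continue;      // gaps between functions are allowed
            }
            found.push_back({bus, device, function});
            if (function == 0) {
                // Header Type is the third byte of the register at 0x0C;
                // bit 7 marks a multi-function device.
                const std::uint32_t reg = read32(bus, device, 0, 0x0C);
                if (((reg >> 16) & 0x80u) == 0) {
                    break;     // single-function device
                }
            }
        }
    }
    return found;
}
```

On real hardware the callback would wrap the CONFIG_ADDRESS and CONFIG_DATA accesses; here it can simply be a lambda which returns 0xFFFFFFFF for anything that is not present.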

Now, let's briefly look at the concept of BARs (Base Address Registers). BARs are used to define the memory addresses (for memory-mapped I/O) and the I/O port addresses used by the PCI devices. These registers define the size of memory to be allocated, and where it can be allocated. Typically, Memory BARs must be in physical memory, whereas I/O BARs do not have this restriction.

0x00 in the Type field means the address is 32-bit, 0x02 means the address is 64-bit and 0x01 is Reserved.
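A raw BAR value can be decoded with a few bit tests. The sketch below assumes the standard BAR layout (bit 0 selects I/O or memory, bits 2-1 hold the Type field and bit 3 is the prefetchable flag for Memory BARs); BarKind, BarInfo and decode_bar are hypothetical names used for illustration.

```cpp
#include <cstdint>

enum class BarKind { IoPort, Memory32, Memory64, Reserved };

struct BarInfo {
    BarKind kind;
    bool prefetchable;
    std::uint32_t base;  // for Memory64 this is only the low 32 bits
};

BarInfo decode_bar(std::uint32_t raw) {
    if (raw & 0x1u) {
        // I/O BAR: the low two bits are flags, the rest is the port base.
        return {BarKind::IoPort, false, raw & ~0x3u};
    }
    const std::uint32_t type = (raw >> 1) & 0x3u;  // Type field, bits 2-1
    const bool prefetchable = (raw & 0x8u) != 0;   // bit 3
    const std::uint32_t base = raw & ~0xFu;        // 16-byte aligned base
    BarKind kind = BarKind::Reserved;
    if (type == 0x0) {
        kind = BarKind::Memory32;
    } else if (type == 0x2) {
        kind = BarKind::Memory64;
    }
    return {kind, prefetchable, base};
}
```

For a 64-bit BAR the upper half of the address lives in the next BAR slot, which this sketch does not read.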

The last concept I'm going to discuss involves Classes. Classes are separated into Class Code, Sub Class Code and Prog IF (Programmable Interface), and are used to identify the type of device and its potential functions.
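As a small illustration, the three fields can be pulled out of the 32-bit register at offset 0x08 of the Configuration Space Header, which also holds the Revision ID in its lowest byte. PciClassInfo and decode_class are hypothetical names for this sketch.

```cpp
#include <cstdint>

struct PciClassInfo {
    std::uint8_t class_code;  // bits 31-24
    std::uint8_t subclass;    // bits 23-16
    std::uint8_t prog_if;     // bits 15-8
    std::uint8_t revision;    // bits 7-0
};

// Split the register at offset 0x08 into its four byte-wide fields.
PciClassInfo decode_class(std::uint32_t reg08) {
    return {static_cast<std::uint8_t>(reg08 >> 24),
            static_cast<std::uint8_t>(reg08 >> 16),
            static_cast<std::uint8_t>(reg08 >> 8),
            static_cast<std::uint8_t>(reg08)};
}
```

For example, the value 0x0C033000 decodes to Class Code 0x0C (Serial Bus Controller), Sub Class 0x03 (USB) and Prog IF 0x30 (an xHCI controller).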

There are a large number of Sub Classes and Functions, so I'll have to omit the complete listing, and refer you to the PCI OSDev Wiki article.

Additional Reading

PCI - OSDev Wiki

PCI Configuration Space

PCI Internals

OSR NtDev List - PCI Function Number

Monday 20 January 2014

Algorithms and Data Structures - Calculating Insertion and Deletion Time Complexity of Singly Linked Lists

Prerequisites:

- Knowledge of C/C++

- Knowledge of Calculus/Algebra

Time Complexity and O(n)

You could consider this topic as a Computer Science/Programming topic. However, I always consider Computer Science and Programming to be two different topics rather than the same thing, even though they both share the same programming principles, such as understanding how to write code to begin with. Computer Science is more like Applied Mathematics and is more theory based, whereas Programming is more practical and uses a language, or multiple languages, as tools to create real-life programs.

I've written a simple program which creates nodes within a linked list, and then walks this linked list when the user has decided they do not wish to insert any more nodes into the list. I've commented the code where necessary so it will be easier to understand. We'll then calculate the time complexity of the algorithm used to insert the nodes within the linked list.

Firstly, let's consider the concept of time complexity and what it means in terms of Computer Science. Time Complexity refers to the amount of time an operation takes to complete as a function of the size of its input. There are different classes of Time Complexity, and different methods apply to each of them. For Singly Linked Lists, insertion and deletion are often regarded as having Constant Time Complexity.

Constant Time Complexity, or simply Constant Time, isn't affected by the size of the input or the number of elements. For example, reading an array element by its index takes one operation, however large the array is. The same applies to a linked list when the position of the insertion or deletion is already known, such as at the list head. To simplify, the number of elements within the linked list does not affect the complexity of insertions or deletions within the linked list. The Big O Notation for constant time is O(1). On the other hand, the Big O Notation for walking the list is O(n), which implies that the cost of walking the list increases in direct proportion to the number of elements within the linked list.
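The difference between O(1) and O(n) can be made visible by counting the work done. The sketch below is my own illustration and not the program from this post: it inserts at the list head, where the position is already known, and the OpCount counters are hypothetical instrumentation added purely for the demonstration.

```cpp
#include <cstddef>

struct node {
    int node_data;
    node* next_node;
};

// Hypothetical counters used only to make the cost visible.
struct OpCount {
    std::size_t pointer_writes = 0;
    std::size_t nodes_visited = 0;
};

// O(1): a single link is set, however long the list already is.
node* insert_at_head(node* head, int value, OpCount& ops) {
    node* fresh = new node{value, head};
    ops.pointer_writes += 1;
    return fresh;
}

// O(n): every node is visited exactly once.
std::size_t walk(const node* head, OpCount& ops) {
    std::size_t length = 0;
    for (const node* cur = head; cur != nullptr; cur = cur->next_node) {
        ++ops.nodes_visited;
        ++length;
    }
    return length;
}

// Release every node of the list.
void destroy(node* head) {
    while (head != nullptr) {
        node* next = head->next_node;
        delete head;
        head = next;
    }
}
```

Inserting 100 values costs one pointer write each, whether the list holds 1 node or 99, while a single walk over the finished list visits all 100 nodes.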

The Big-O Complexity Interactive Graph supports this point.

Singly Linked List Code (C++)

Let's examine this simple node structure. The structure has two members: node_data and next_node. The node_data member is of an integer type, and represents the data stored within each node. The next_node member is a pointer to a node (the same structure), and is used to point to the next node within the singly linked list.

Remember that linked lists start with a list head, which is essentially the beginning of the linked list.

The function will keep creating new nodes as long as the user wants to. The node_data can be defined by the user.
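Since the screenshots of the program are not reproduced here, the following is only a minimal sketch of what the node structure and the insertion step might look like. The member names node_data and next_node come from the description above; push_front, create_nodes and free_list are hypothetical names, and a std::vector stands in for the values the user would type in.

```cpp
#include <cstddef>
#include <vector>

// Node layout as described in the text: an integer payload and a pointer to the next node.
struct node {
    int   node_data;
    node* next_node;
};

// Hypothetical helper: inserts a new node at the list head.
// One allocation and one pointer assignment happen however long the list is, so this is O(1).
node* push_front(node* head, int value) {
    return new node{value, head};
}

// Hypothetical stand-in for the interactive loop: creates one node per supplied value.
node* create_nodes(const std::vector<int>& values) {
    node* head = nullptr;                 // an empty list has a null list head
    for (int v : values) {
        head = push_front(head, v);
    }
    return head;
}

// Releases every node and returns how many nodes were freed.
std::size_t free_list(node* head) {
    std::size_t freed = 0;
    while (head != nullptr) {
        node* next = head->next_node;
        delete head;
        head = next;
        ++freed;
    }
    return freed;
}
```

Because each new node is placed in front of the current head, the last value inserted ends up first in the list.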

Once the user has created all the desired nodes within the linked list, the walk_list function will be called.
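A walk over the list could be sketched as follows. The walk_list name comes from the text, but its signature and the returned visit count are assumptions added here so that the O(n) behaviour is easy to observe.

```cpp
#include <cstddef>
#include <iostream>

// Same node layout as described earlier in the post.
struct node {
    int   node_data;
    node* next_node;
};

// Visits every node once, prints its data, and returns the number of nodes visited.
// The loop body runs once per node, which is why walking the list is O(n).
std::size_t walk_list(const node* head) {
    std::size_t visited = 0;
    for (const node* current = head; current != nullptr; current = current->next_node) {
        std::cout << current->node_data << '\n';
        ++visited;
    }
    return visited;
}
```

Doubling the number of nodes doubles the number of loop iterations, whereas the cost of inserting at the head stays the same.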

References

Big O Notation

Time Complexity

Complexity Theory - Definitions of an algorithm running in polynomial time and strongly polynomial time

A Beginner's Guide to Big O Notation

How To Create a Linked List Using C/C++

- Knowledge of C/C++

- Knowledge of Calculus/Algebra

Time Complexity and O(n)


Figure 1 - Node Structure

Figure 2 - create_node() Function

Figure 3 - create_node() Function


Saturday 18 January 2014

Internals of Direct Memory Access Part 2

This is Part 2 of my tutorial looking at how Direct Memory Access works on Windows. This part looks at Bus Mastering, which is the current and modern implementation of DMA.

With Bus Mastering, there is no concept of a separate DMA controller, since the device drives the transfer itself, and therefore Direct Memory Access with Bus Mastering naturally has better performance. Bus Mastering DMA is also referred to as First-Party Direct Memory Access.

The device which becomes the Bus Master usually takes full responsibility for maintaining information about the buffer, such as the base and the length of each physical buffer fragment. Remember that the bus only sees physical memory, which can be fragmented. Most devices will perform a transfer for each physical buffer fragment, reducing the overhead for the device but increasing the overhead for the transfers.

You may have heard about Scatter/Gather transfers used with drivers. These enable drivers to set up a chain of buffer fragments and then transfer all of the fragments as one DMA transaction. For example, you may be reading from one buffer and then writing the data into multiple buffers. The opposite may also be true. Scatter/Gather operations are used with a Scatter/Gather list, which comprises one entry per fragment describing the memory location and length to be transferred for a particular device. The GetScatterGatherList function creates this list for the associated device.
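To make the idea of a chain of fragments more concrete, here is a small user-mode model of how such a list could be built. It is not the Windows implementation: sg_element, build_sg_list, the 4 KB page size and the std::map used as a page table are all assumptions made for illustration.

```cpp
#include <algorithm>
#include <cstdint>
#include <map>
#include <vector>

constexpr std::uint64_t kPageSize = 4096;   // assumed page size

// One entry per physically contiguous fragment (hypothetical address/length pair).
struct sg_element {
    std::uint64_t physical_address;
    std::uint64_t length;
};

// page_table maps a virtual page number to a physical page number.
// It stands in for the memory manager and is purely an assumption of this sketch.
std::vector<sg_element> build_sg_list(std::uint64_t virtual_base, std::uint64_t length,
                                      const std::map<std::uint64_t, std::uint64_t>& page_table) {
    std::vector<sg_element> list;
    std::uint64_t va = virtual_base;
    std::uint64_t remaining = length;
    while (remaining > 0) {
        const std::uint64_t offset = va % kPageSize;
        const std::uint64_t chunk  = std::min(remaining, kPageSize - offset);
        const std::uint64_t pa     = page_table.at(va / kPageSize) * kPageSize + offset;
        // Extend the previous fragment when this piece follows it directly in physical memory.
        if (!list.empty() && list.back().physical_address + list.back().length == pa) {
            list.back().length += chunk;
        } else {
            list.push_back({pa, chunk});
        }
        va += chunk;
        remaining -= chunk;
    }
    return list;
}
```

A buffer which is contiguous in virtual memory can therefore produce several entries, one for each run of physically adjacent pages.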

With Windows, there are two types of DMA operation: packet-based and common buffer. Before we look at Packet Based and Common Buffer based transfers, we need to understand the concept of Map Registers in association with DMA.

Map Registers

Map Registers serve the same purpose as PTEs do in Page Translation, except that it is the bus's logical address space which is translated to physical addresses within physical memory (RAM). By using Map Registers, drivers are able to reference logical addresses rather than physical addresses; however, remember that DMA still only uses physical memory addresses to complete transfers. Map Registers are a form of abstraction for driver developers.
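The comparison with PTEs can be illustrated with a toy model in which each map register translates one logical page into one physical page. The map_register_file class, its methods and the 4 KB page size are hypothetical; real map registers are managed by the HAL and are not exposed like this.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

constexpr std::uint64_t kPageSize = 4096;   // assumed page size

// Hypothetical model of a single map register.
struct map_register {
    bool          valid = false;
    std::uint64_t physical_page = 0;
};

// Map register i translates logical page i of the device's address space.
class map_register_file {
public:
    explicit map_register_file(std::size_t count) : regs_(count) {}

    // Programs a register with the physical page backing one logical page.
    void program(std::size_t index, std::uint64_t physical_page) {
        regs_.at(index).valid = true;
        regs_.at(index).physical_page = physical_page;
    }

    // Translates a logical address; returns false if no register covers it.
    bool translate(std::uint64_t logical, std::uint64_t& physical) const {
        const std::uint64_t index = logical / kPageSize;
        if (index >= regs_.size() || !regs_[index].valid) {
            return false;
        }
        physical = regs_[index].physical_page * kPageSize + logical % kPageSize;
        return true;
    }

private:
    std::vector<map_register> regs_;
};
```

As with a page table, the offset within the page is carried over unchanged and only the page number is replaced.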

Map Registers are supported by Windows and the WDM framework with the DMA_ADAPTER structure, and the IoGetDmaAdapter function. We can examine this structure in WinDbg. I'll also show the code for the function.

The more reliable method would be to use the !dma extension with DMA Verification enabled for Driver Verifier.

The _DMA_OPERATIONS structure contains pointers to all the associated functions which can be called for that physical device object. This structure is usually implemented as a table of pointers.

As you can see, the function will return a reference to the _DMA_ADAPTER structure. The Physical Device Object parameter is associated with the device wishing to obtain the address of the adapter structure, whereas, the Device Description parameter is a pointer to the _DEVICE_DESCRIPTION structure which describes the current attributes for the device. The Number of Map Registers parameter is the number of map registers which can be used for a DMA transfer.

The diagram below shows the correspondence between virtual memory and physical memory using Map Registers managed by the HAL.

Packet Based Transfer

DMA Packets are prepared and created by Windows, and these packets will be transferred to and from the physical device object. Each packet depends upon the availability of the Map Registers, and if not enough are available for the transfer, the packet will be added to a queue until there are enough Map Registers to complete the DMA transfer. GetScatterGatherList and PutScatterGatherList are generally used with Packet Based Transfers.
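The queueing behaviour described above can be modelled in a few lines. This is a sketch only: map_register_pool and its methods are hypothetical, and the first-in, first-out ordering of waiting packets is an assumption rather than something taken from the Windows source.

```cpp
#include <cstddef>
#include <deque>
#include <vector>

// Toy model of a fixed pool of map registers shared by DMA packets.
class map_register_pool {
public:
    explicit map_register_pool(std::size_t total) : free_(total) {}

    // Returns true if the packet can start immediately; otherwise the packet is queued.
    bool request(int packet_id, std::size_t needed) {
        if (waiting_.empty() && needed <= free_) {
            free_ -= needed;
            return true;
        }
        waiting_.push_back({packet_id, needed});
        return false;
    }

    // Returns registers to the pool, then starts queued packets in FIFO order.
    // The ids of the packets which were started are returned.
    std::vector<int> release(std::size_t count) {
        free_ += count;
        std::vector<int> started;
        while (!waiting_.empty() && waiting_.front().needed <= free_) {
            free_ -= waiting_.front().needed;
            started.push_back(waiting_.front().packet_id);
            waiting_.pop_front();
        }
        return started;
    }

    std::size_t free_registers() const { return free_; }

private:
    struct pending {
        int         packet_id;
        std::size_t needed;
    };
    std::size_t         free_;
    std::deque<pending> waiting_;
};
```

A packet which cannot be satisfied simply waits; it is started when an earlier transfer finishes and hands back its registers.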

The number of available registers can be found in WinDbg with the _DMA_OPERATIONS structure.

The FreeMapRegisters member is a function pointer to the FreeMapRegisters function which frees the number of allocated map registers for the DMA transfer.

Common Buffer Transfer

Let's examine the concept behind Common Buffer transfers. Common Buffers consist of an allocated region of kernel memory address space, which can be used to transfer information between your driver and your device.

The AllocateCommonBufferEx function is used to create a buffer space called the Common Buffer.

Common Buffers do not require Map Registers, and are created within the address space of the associated DMA device. The buffer is also physically contiguous.

Common Buffers can't be allocated at IRQL Level 2 (DISPATCH_LEVEL).

More Information - What is DMA (Part 4) - Common Buffers

DMA Attack:

DMA Attacks require access to physical memory, whereby the malicious code can access the kernel address space, device address space, registers and other important structures and data. In User-Mode, this access is prevented by the MMU (Memory Management Unit), which will not allow User-Mode code direct access to such memory addresses. By writing a malicious device driver or other Kernel-Mode code, someone would be able to access these important data structures, and either steal information or corrupt the operating system.

However, any drivers which run on Windows will need to be digitally signed with a certificate.

References:

The NT Insider: Driver Basics - DMA Concepts


Wednesday 15 January 2014

Understanding Memory Barriers

Memory Barriers in Code

Memory Barriers are used to enforce an ordering on how the compiler or CPU executes memory operations. Since most CPUs have some form of technology which is used to optimize the execution of code, the problem of out-of-order execution can occur in programs, leading to unpredictable results. Each variable or data structure we create is referred to as an object, and each object has a memory address and a memory ordering. This ordering defines which variables are written to and loaded first.

You will probably never notice this with a program which has one thread, which is typically just main. However, if you create two threads and have these two threads run concurrently, the question of which thread runs first arises. Although we can add synchronization dispatcher objects to our code, the point of this blog post is to look at the concept of memory barriers and examine the Assembly code which is produced through binary analysis.

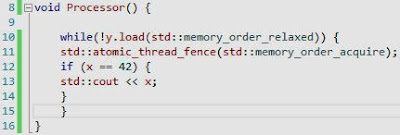

In the Main thread, I've created a global atomic boolean value called y, and have set it to false. Two separate additional threads are created, which have functions called Processor and Processor2.

The code for the Processor function is above. While y has not yet been read as true, the thread waits; once it has, the acquire fence forms a synchronizes-with relationship with the release fence in the other thread. When using fences, there must be a pair of different fences (a release fence on the storing side and an acquire fence on the loading side) to generate this relationship, otherwise we will not achieve the purpose of our synchronization.

The code for the Processor2 function shows that x will be written with the value of 42. Again, x is a global variable, and is thus available to all parts of the program. We then insert a fence between the store to x and the store to y; this ensures that the store to x is visible to the other thread once that thread has read the new value of y and passed its own acquire fence.

If the load before the acquire fence observes the store made after the release fence, then the acquire fence will form a synchronizes-with relationship with that release fence.

It's easier to understand with the insertion of an assert to our code in the Main thread. The assert is controlled by the value of a global atomic variable called z. If this variable has been incremented by 1, then the assert will not fire.

Once the two threads have synchronized, the value of x will be evaluated and then the value of z will be incremented to 1, ensuring that the assert doesn't fire and terminate the program.
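Putting the pieces described above together, a minimal sketch of such a program might look like the following. The names x, y, z, Processor and Processor2 are taken from the text; the type of x, the exact memory orderings and the run_fence_demo wrapper are assumptions, so this should not be read as the exact code shown in the screenshots.

```cpp
#include <atomic>
#include <cassert>
#include <thread>

std::atomic<bool> y(false);   // flag published by the writing thread
std::atomic<int>  z(0);       // incremented once the reader has seen x == 42
int x = 0;                    // plain global (assumed type), ordered only by the fences

// Writer: store x, then a release fence, then a relaxed store to y.
void Processor2() {
    x = 42;
    std::atomic_thread_fence(std::memory_order_release);
    y.store(true, std::memory_order_relaxed);
}

// Reader: spin until y is seen as true, then an acquire fence, then read x.
void Processor() {
    while (!y.load(std::memory_order_relaxed)) {
        // busy-wait until the writer's store to y becomes visible
    }
    std::atomic_thread_fence(std::memory_order_acquire);
    if (x == 42) {
        ++z;
    }
}

// Hypothetical wrapper standing in for main: runs both threads and returns z.
int run_fence_demo() {
    x = 0;
    y = false;
    z = 0;
    std::thread a(Processor);
    std::thread b(Processor2);
    a.join();
    b.join();
    assert(z.load() != 0);   // holds because the two fences synchronize with each other
    return z.load();
}
```

Without the two fences, the relaxed operations on y would give no guarantee that the reader sees x as 42, and reading the non-atomic x would be a data race.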

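You may have heard or seen people talk about the use of the volatile keyword. This keyword is used to tell the compiler that the value may be modified by something outside of the program's control, such as the hardware, so accesses to it must not be optimized away. This brings in the term of modification ordering. Standard C++ does not treat volatile as a synchronization mechanism between threads, although the Microsoft compiler can give volatile accesses acquire and release semantics. See the references section for more information.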

Memory Barriers Internals

I assume you know generally how the cache works, and what cache hits and misses are. In this case, I'm going to discuss some of the features of the cache and then explain how memory barriers relate to the operation of the CPU Cache.

The above is the general structure of the cache, and its relationship to memory. Initially, each access will be a cache miss, since no data is present within the cache yet. However, once the data has been fetched from memory and placed into the cache, a cache hit will occur for each subsequent read of that same object.

However, the cache is a limited resource and therefore cannot hold every object being read from memory. When the cache becomes full and the processor attempts to write new data to the cache, cache capacity misses will result. These cache misses, as the name suggests, are a result of the lack of space within the cache.

The cache is organized as a hash table with fixed-size buckets called sets, and no chaining. A hash table is a data structure used to implement an associative array; a hash function maps each key to the bucket which holds its value. Essentially, the key is used to index into the buckets stored in the hash table. In the case of the cache, the key is the memory address of our object.

Looking at the above example of a cache, we can see there are currently 16 different buckets or sets. The hash function is based upon an algorithm which knows the key and the size of the array. Furthermore, hash tables used with the cache do not support chaining, and therefore hash collisions are always possible. Separate Chaining is the concept that an index can have multiple entries held in a linked list, which avoids the problem of hash collisions.
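As a rough illustration of how a cache "hash function" can simply pick bits out of the address, consider the sketch below. The 16 sets match the example above, while the 64-byte line size and the function names are assumptions chosen for this example only.

```cpp
#include <cstdint>

constexpr std::uint64_t kLineSize = 64;   // assumed cache line size in bytes
constexpr std::uint64_t kNumSets  = 16;   // number of sets in the example cache

// The "hash": drop the offset within the line, then take the line number modulo the set count.
constexpr std::uint64_t set_index(std::uint64_t address) {
    return (address / kLineSize) % kNumSets;
}

// The tag distinguishes different lines which map to the same set.
constexpr std::uint64_t tag(std::uint64_t address) {
    return address / (kLineSize * kNumSets);
}

// Two addresses collide when they fall in the same set but belong to different lines.
constexpr bool collides(std::uint64_t a, std::uint64_t b) {
    return set_index(a) == set_index(b) && tag(a) != tag(b);
}
```

With these numbers, addresses 0x0000 and 0x0400 land in the same set, so with no chaining one of them may have to be evicted to make room for the other.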

When the cache has become full, the data within one of the sets will need to be removed, and the new data will need to be added. On the other hand, when one processor writes to data held in another processor's cache, the operation will result in an unnecessary stall lasting hundreds of instructions.

CPU 0 sends an invalidate message for the cache line to CPU 1. The message contains the physical address of the cache line, and causes CPU 1 to remove the data from its cache so that CPU 0 is able to write to that line. As you can see, this is an expensive operation, and there is a better method. The answer lies with Store Buffers, whereby a processor can record its writes within its store buffer and continue executing until it's appropriate to make the write to the cache, but this leads to the problems of memory ordering and out-of-order execution.

Due to the problems of memory ordering and store buffers, memory barriers were introduced to enable programmers to perform synchronization at a level close to the hardware of the system.

Memory Barriers Disassembly

The main thread of the program begins at mainCRTStartup, which jumps to a block of code which contains the necessary thread creation functions. By jumping to the location pointed to by the jump instruction, we can see how our thread is started. Please note that all default compiler and linker options are enabled, so you may notice things like security cookies.

The function begins by pushing the value of the ebp register onto the stack. The value of the esp register (the stack pointer) is then moved into ebp, which becomes our current stack frame pointer. We then call some functions, and pop the saved value of the ebp register from the stack. The function then returns.

I was able to find most of the fence instructions and thread functions. We can jump to where these instructions begin, and examine the Assembly code used to produce them.

Let's look at the jump instruction for the atomic load used with the fence (std::atomic_load_explicit), and have a quick look at a few of the details.

We can see that the function takes the atomic variable and the memory ordering constant, which are later pushed onto the stack.
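For reference, the two parameters seen in the disassembly correspond to the two arguments of the source-level call. The snippet below is a small sketch; the counter variable and the two wrapper functions are hypothetical names used only to show the call.

```cpp
#include <atomic>

std::atomic<int> counter(7);   // hypothetical atomic variable

// First argument: a pointer to the atomic object.
// Second argument: the memory ordering constant to use for the load.
int read_relaxed() {
    return std::atomic_load_explicit(&counter, std::memory_order_relaxed);
}

int read_acquire() {
    return std::atomic_load_explicit(&counter, std::memory_order_acquire);
}
```

Both functions return the same value here; the ordering constant only changes which surrounding memory operations the load is allowed to be reordered with.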

References:

volatile (C++)

std::memory_order

Hash Table

Memory Barriers : a Hardware View for Software Hackers

Multiprocessor Considerations for Kernel-Mode Drivers

